TEGRA Integration Services

With the TEGRA services we provide an integration layer for our cognitive technologies that any application or service can consume through a RESTful API. TEGRA is easy to install, deploy and manage, either on dedicated servers or through Docker and Kubernetes. Almost arbitrary scaling is provided through TEGRA workers that can be started and put to sleep again based on current demand. TEGRA integrates OCR, classification and extraction into any legacy application through direct API calls or via readily available RPA integrations.

TEGRA is intended for partners and end users who already have a platform to manage their data and processes and want to enhance it with intelligent cognitive functions without the need for a tedious SDK integration.

TEGRA Concept

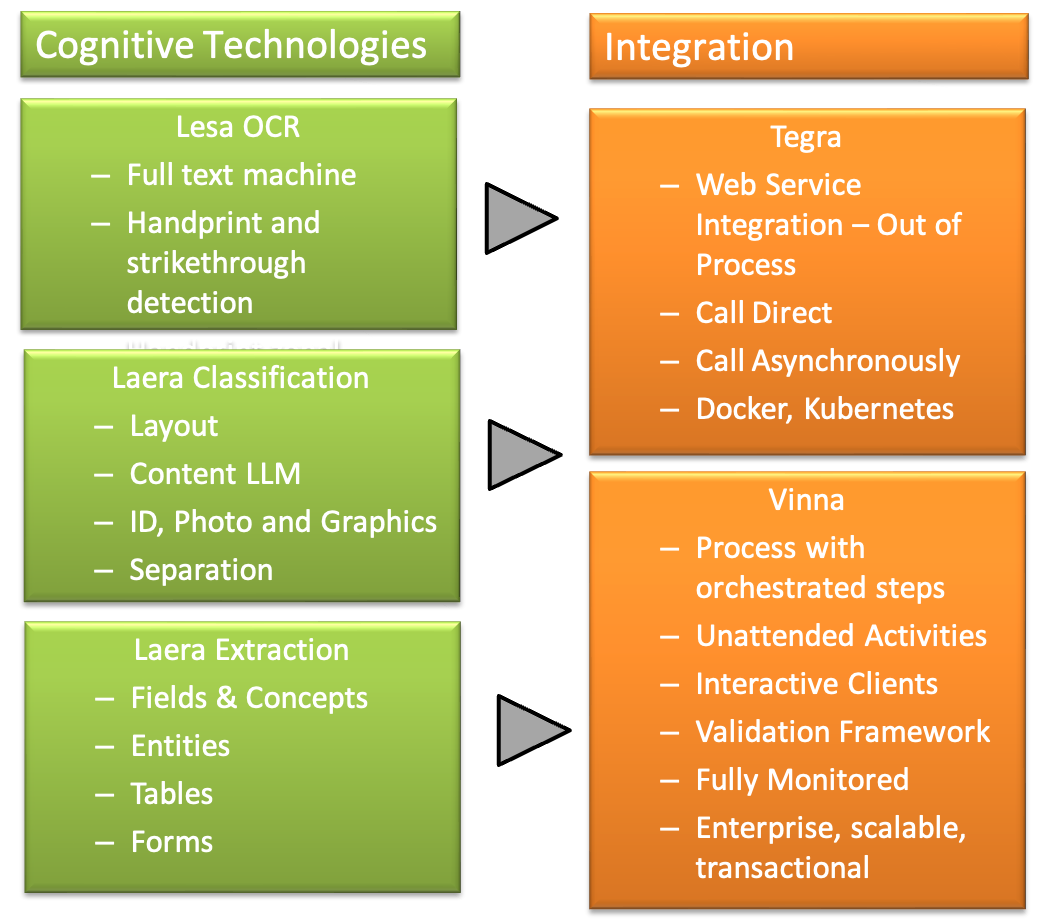

Skilja’s intelligent AI and cognitive technologies are developed independently of any platform. The cognitive technologies are:

- LESA AI OCR

- LAERA Classifier

- LAERA Extraction

Classification and Extraction come with their own designers, which allow even non-technical users to quickly set up the recognition, train it, refine it, test it and then save it as a self-consistent model. LESA is included in the designers for free.

The model and the defined algorithms are then executed by runtime libraries. These are integrated into the TEGRA services, where they can be used through a documented RESTful API, or are available as activities in VINNA. Because the model and the libraries are identical, execution in either integration produces the same result. The integrations can even be mixed, depending on the customer requirement, by calling TEGRA from a VINNA activity.

TEGRA Architecture

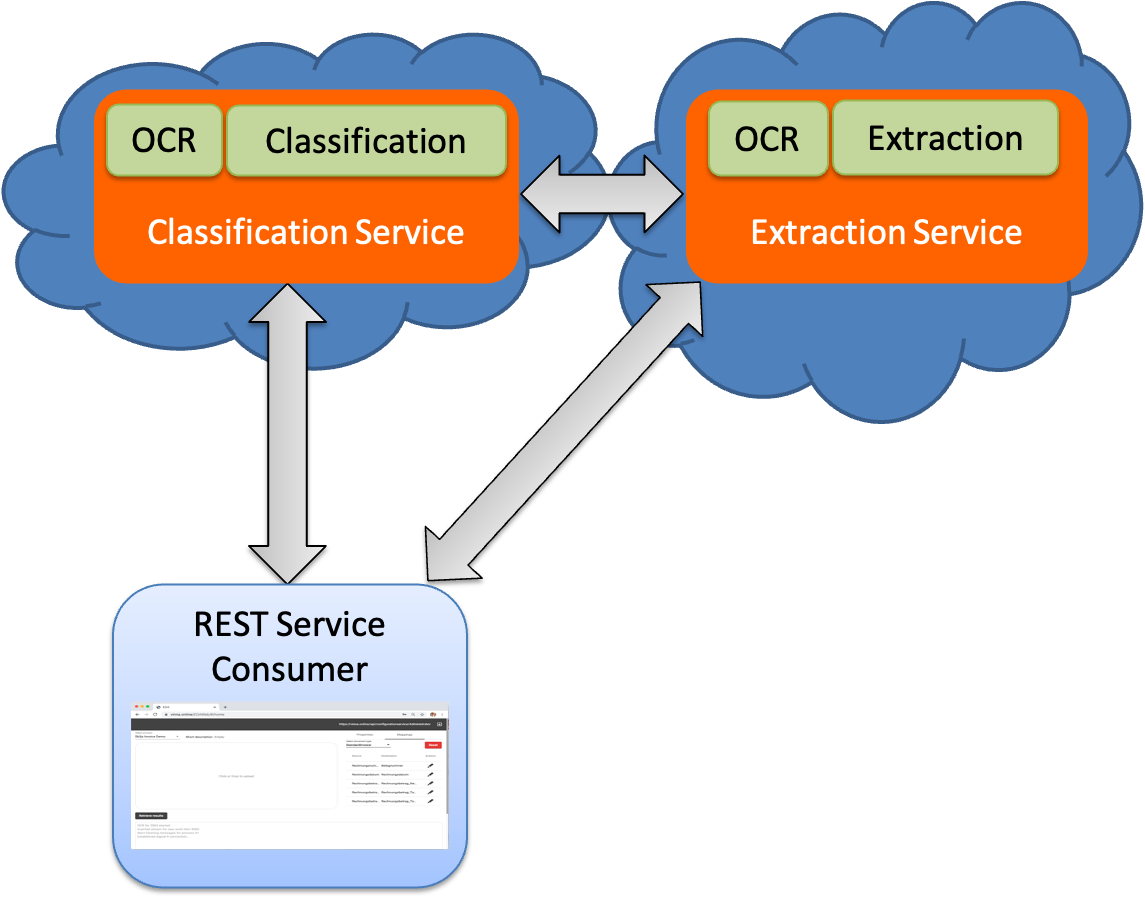

TEGRA consists of two services: one for OCR + classification and one for OCR + extraction. These services encapsulate the cognitive algorithms in an easy-to-use way.

To start, upload a document to the service via the RESTful API and choose the project and the pre-trained model to be used for classification and extraction. The model has been generated beforehand with the Designers and stored in a database. Both services have access to this database and load the corresponding models on demand. Models are versioned and secured. If no connection to the database is allowed, the project and the model can also be uploaded directly through the API. Documents can be uploaded in a variety of formats, including all bitmap formats (JPG, TIF, PNG, …) and PDF.

Processing is then performed asynchronously. This allows a large batch of documents to be uploaded at once; they are placed in a queue ordered by priority, urgency and other criteria. When a document is finished, the consumer is notified or can poll the service for the status. In the last step the consumer simply downloads the results as a structured JSON file, as plain text and optionally as a PDF with a full text layer.

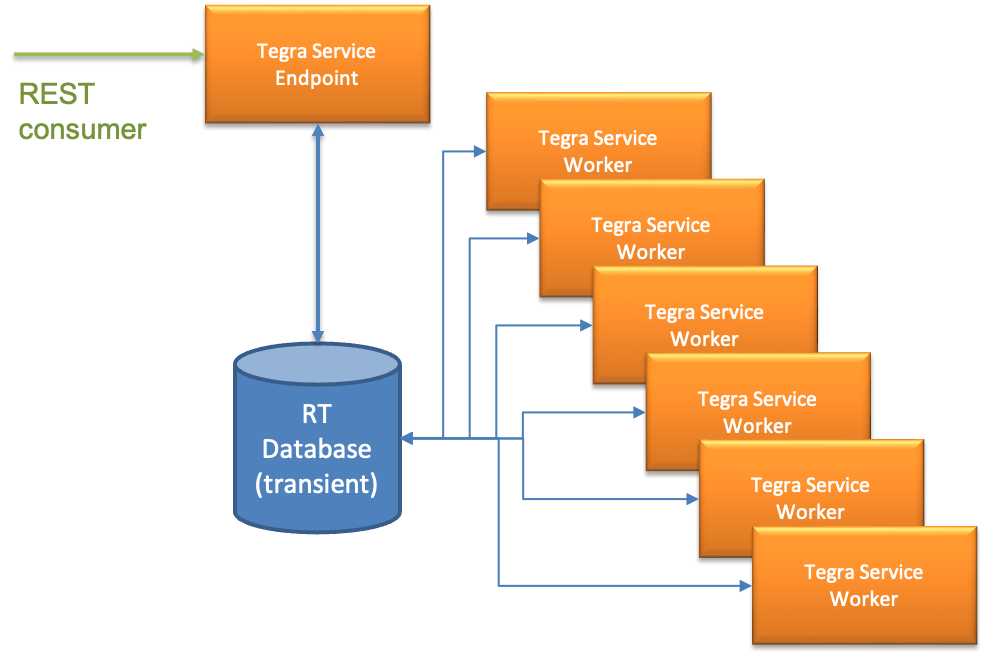
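The polling side of this asynchronous workflow can be sketched in Python as follows. This is a minimal illustration, not the TEGRA client: the status values, the `wait_for_result` helper and the in-memory `FakeService` are all hypothetical, and the real API may use different names and endpoints. The status lookup is passed in as a function so the loop stays independent of any HTTP library.

```python
import time
from typing import Callable, Dict

# Hypothetical status values; the real TEGRA API may use different names.
PENDING, DONE = "pending", "done"


def wait_for_result(
    get_status: Callable[[str], str],
    document_id: str,
    interval_s: float = 1.0,
    timeout_s: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status(document_id) until it leaves PENDING or the timeout expires."""
    waited = 0.0
    while True:
        status = get_status(document_id)
        if status != PENDING:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"{document_id} still pending after {timeout_s}s")
        sleep(interval_s)
        waited += interval_s


class FakeService:
    """In-memory stand-in for the service, used only to exercise the loop."""

    def __init__(self, polls_until_done: int) -> None:
        self._polls_until_done = polls_until_done
        self._remaining: Dict[str, int] = {}

    def upload(self, document_id: str) -> None:
        # A real client would send the file plus project and model here.
        self._remaining[document_id] = self._polls_until_done

    def status(self, document_id: str) -> str:
        if self._remaining[document_id] > 0:
            self._remaining[document_id] -= 1
            return PENDING
        return DONE


if __name__ == "__main__":
    service = FakeService(polls_until_done=3)
    service.upload("invoice-001")
    # Skip real sleeping in the demo so it finishes immediately.
    print(wait_for_result(service.status, "invoice-001", sleep=lambda _: None))
```

In a real integration, `get_status` would wrap the HTTP call that queries the document status, and a notification callback could replace polling entirely where the consumer can receive one.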

TEGRA Scalability

TEGRA services can be scaled almost arbitrarily. One of the services acts as the service endpoint that the consumer accesses through the API. This service stores the document in a transient database for queuing. Supported databases are PostgreSQL, MS SQL Server and Oracle. The other services pick up the workload from the database and individually process the documents based on priority. The project and model to be used are loaded on demand by each TEGRA worker based on the request from the consumer.
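The endpoint/worker split described above can be illustrated with a small in-memory sketch. It is a simplified model under stated assumptions, not TEGRA's implementation: the class names are hypothetical, a heap stands in for the transient database, and a lower number is assumed to mean higher priority.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass(order=True)
class Job:
    priority: int   # assumption: lower number = more urgent
    sequence: int   # tie-breaker, keeps submission order within a priority
    document_id: str = field(compare=False)
    project: str = field(compare=False)
    model: str = field(compare=False)


class TransientQueue:
    """Stands in for the transient database that queues uploaded documents."""

    def __init__(self) -> None:
        self._heap: List[Job] = []
        self._counter = itertools.count()

    def submit(self, document_id: str, project: str, model: str, priority: int = 5) -> None:
        job = Job(priority, next(self._counter), document_id, project, model)
        heapq.heappush(self._heap, job)

    def claim(self) -> Optional[Job]:
        """Hand the most urgent waiting job to a worker, or None if idle."""
        return heapq.heappop(self._heap) if self._heap else None


class Worker:
    """Loads a project/model pair the first time a job asks for it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.model_loads = 0
        self._loaded: Set[Tuple[str, str]] = set()

    def process(self, job: Job) -> str:
        key = (job.project, job.model)
        if key not in self._loaded:   # on-demand load, then reuse
            self._loaded.add(key)
            self.model_loads += 1
        return f"{self.name} processed {job.document_id}"


if __name__ == "__main__":
    queue = TransientQueue()
    queue.submit("a.pdf", "invoices", "v1", priority=5)
    queue.submit("b.pdf", "invoices", "v1", priority=1)
    queue.submit("c.pdf", "invoices", "v1", priority=5)
    worker = Worker("worker-1")
    while (job := queue.claim()) is not None:
        print(worker.process(job))
```

With several workers claiming from the same queue, throughput grows with the number of workers, which is the scaling behaviour the text describes. A database-backed queue would additionally need an atomic claim so that two workers never take the same document.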

By default, OCR is performed as the first step. Optionally, the API also allows uploading existing OCR results (if available) or using the text layer of a PDF to avoid this time-consuming step. The TEGRA classification service can also communicate directly with the TEGRA extraction service, passing on the classification results and the text layer already produced by LESA AI OCR for the extraction.
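The choice between running OCR and reusing existing text can be pictured as a small decision function. This is only a sketch: the function name, the return labels and the order of precedence (uploaded OCR results first, then the PDF text layer) are assumptions made for illustration, since the text does not specify how the options are combined.

```python
from typing import Optional


def choose_text_source(
    uploaded_ocr: Optional[str] = None,
    pdf_text_layer: Optional[str] = None,
) -> str:
    """Return which text source to use; fall back to OCR when none is supplied."""
    if uploaded_ocr:
        return "uploaded_ocr"    # consumer supplied existing OCR results
    if pdf_text_layer:
        return "pdf_text_layer"  # reuse the text already embedded in the PDF
    return "run_ocr"             # default: OCR is performed as the first step


if __name__ == "__main__":
    print(choose_text_source())
    print(choose_text_source(pdf_text_layer="Invoice 4711"))
```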

All services are available as Docker containers, run on Windows and Linux, and can be deployed in any cloud.