Humans have a remarkable capability to compensate for noisy signal and incomplete information. We are able to distinguish and recognize relevant information even when the signal to noise ratio is extremely low. Missing data is reconstructed from context knowledge or simply by guessing. Sometimes guessing is wrong – which is used to demonstrate effects in sensory illusions but in most cases it is very accurate.

Compensation and fault tolerance are most remarkable for our visual sense. This is not surprising as eyesight is our primary sense and utterly important for survival in the jungle as well as in a modern city. Humans can distinguish features at very low light with very low contrast and even reconstruct 3d objects from almost any angle of view. Visual sense is the first step for cognition and corresponds to creating a digital image in document capture which today is mostly done through scanners but more and more also with cameras especially in mobile devices. A lot of effort has been made to improve the quality of these digital images in the past to account for the obvious ability of a human to read almost anything. Humans can easily adapt to a wide range of contrast and colors, they have no problem ever to distinguish between foregrounds (written information) from preprinted background. They are not disturbed by stains, punch holes, distorted or crumpled pages – they simply compensate. I know from own experience how difficult it is to mimic only part of this capabilities in software and how far we are from what every human eyeball can do.

But fault tolerance does not stop at the imaging step. Our mental system is able to apply similar methodology also to printed information. This can also be faulty. Often text that has been recognized by OCR contains errors due to the problems in image quality named above. But often these errors also result from typos or abbreviations.

Our cognitive system does a remarkable job in making sense out of distorted information when it can put it into context. A famous text to demonstrate this is shown below. You can read this can’t you?

“Aoccdrnig to a rscheearch at Cmabrigde Uinervtisy, it deosn’t mttaer in waht oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht the frist and lsat ltteer be at the rghit pclae. The rset can be a toatl mses and you can sitll raed it wouthit porbelm. Tihs is bcuseae the huamn mnid deos not raed ervey lteter by istlef, but the wrod as a wlohe.”

This text circulated in the internet since September 2003. It simply randomizes the characters in the middle, leaving the first and last letter unchanged. You can compensate easily in your mind for this.

The origin of the text is by the way not in Cambridge as it appears but can be tracked down to the PhD of Graham Rawlinson at Nottingham University (1976, referenced here) which showed that randomizing letters in the middle of words had little or no effect on the ability of skilled readers to understand the text.

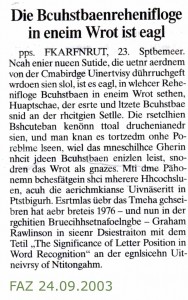

Interestingly this works as well in other languages as shown in the article from Frankfurter Allgemeine Zeitung from 2003, which by the way also cites the correct author Rawlinson.

Matt Davis of the MRC Cognition and Brain Sciences Unit at Cambridge University did some work around this now quite famous Internet Meme. They created a page with examples in all kind of different languages as well as some discussion and explanation from the – University of Cambridge.

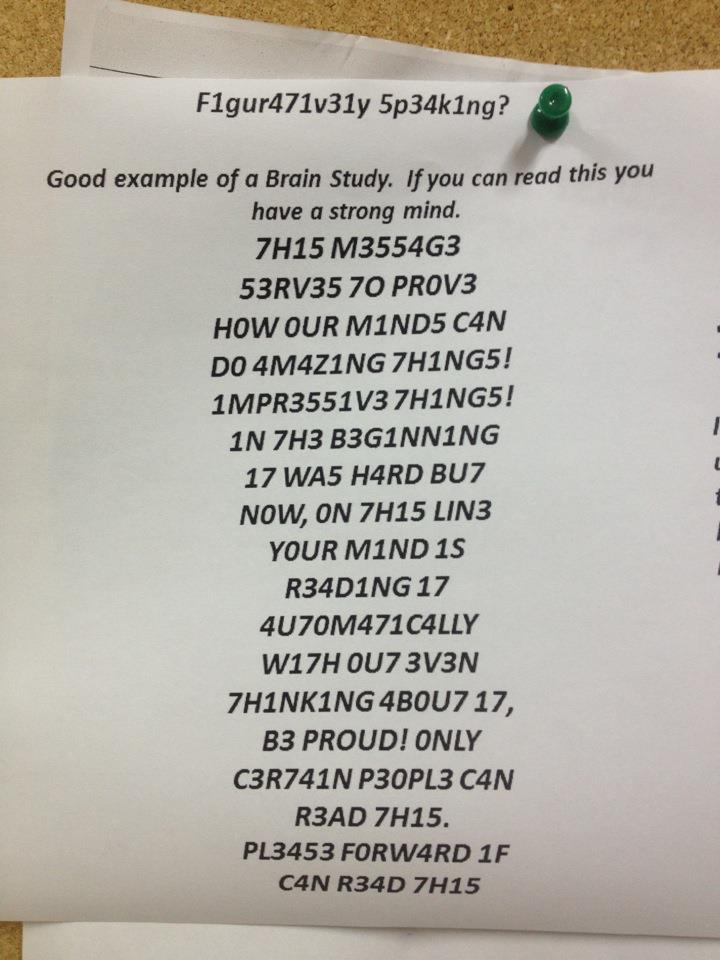

Recently another puzzle “Figuratively Speaking” was floating over the net. I was not able to track the source down, but it is too striking not to be mentioned. It shows another jumbled text which is much more difficult to read. And still most people can do it.

If you are unable to make sense of it – don’t despair, there seem to be more people out there. Simply search for “This message serves to prove…” or look it up here: http://answers.yahoo.com/question/index?qid=20110831113639AAxI3zL

In this case some letters have been consistently replaced by numbers throughout the text. Our brain is able to detect this pattern quickly and once adapted reads it with ease.

In document understanding these capabilities are simulated using fuzzy matching and associative lookup. First the known words of a language can be matched with a dictionary to identify their meaning. Fuzzy means that a certain fault tolerance (deviation of one or two characters) is accepted as long as the matched word is clear without ambiguity. All major OCR engines have this kind of dictionary match built in.

For more complex data structures like an address the associative lookup offers to possibility to match the incomplete data with a complete stored record. If the result of the lookup is unambiguous and confident enough the faults can be repaired. Spelling errors in the name of the city can be corrected if the zip code and the street name are used to match the complete address. In an upcoming post we will look into associative matching and the results that can be expected.

In the end we are left with a similar situation as with imaging: While software can mimic some functionality of human cognitive capabilities it is far from achieving the same amazing results as you have experienced yourself in the examples given above.